By Annie Young

Computer vision, the practice of giving machines the ability to process images in an advanced way, has received increased attention from researchers in the last several years. In medicine, it is a broad term that encompasses all the means through which images can be used to achieve medical aims. Applications range from automatically scanning photos taken on mobile phones, to creating 3D renderings that aid in patient evaluations, to developing models for emergency room use in underserved areas.

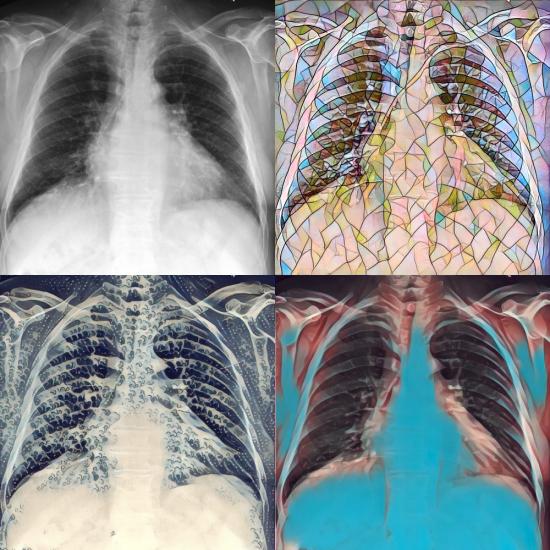

Access to a greater number of images is apt to give researchers the volume of data needed to develop better and more robust computer vision models. A collection of images that have been enhanced (scrubbed of identifying details and then highlighted in critical areas) can therefore have massive potential for researchers and radiologists who rely on photographic data in their work.

This week, the Laboratory for Computational Physiology (LCP), a part of the Institute for Medical Engineering & Science (IMES) led by Professor Roger Mark, launched a preview of its MIMIC-Chest X-Ray database, a repository of more than 350,000 detailed chest X-rays gathered over five years at Beth Israel Deaconess Medical Center. The project, like the lab’s previous MIMIC-III, which houses critical care patient data from over 40,000 ICU stays, is free and open to academic, clinical, and industrial investigators via the research resource PhysioNet. It represents the largest selection of publicly available chest radiographs to date.

With access to MIMIC-Chest X-Ray, funded by Philips Research, registered users and their cohorts can more easily develop algorithms for fourteen of the most common findings from a chest X-ray, including pneumonia, cardiomegaly (enlarged heart), edema (excess fluid), and a punctured lung. By linking visual markers to specific diagnoses, machines can help clinicians draw more accurate conclusions faster and thus handle more cases in less time. These algorithms could prove especially beneficial for doctors working in underfunded and understaffed hospitals.

“Rural areas typically have no radiologists,” said Alistair E. W. Johnson, co-developer of the database along with Tom J. Pollard; Nathaniel R. Greenbaum; Matthew P. Lungren; Seth J. Berkowitz, Director of Radiology Informatics Innovation; Chih-ying Deng of Harvard Medical School; and Steven Horng, Associate Director of Emergency Medicine Informatics at Beth Israel Deaconess Medical Center. “If you have a room full of ill patients, and no time to consult an expert radiologist, that’s somewhere where a model can help.”

In the future, the lab hopes to link the X-ray archive to MIMIC-III, forming a database that includes both patient ICU data and images. More than 9,000 registered MIMIC-III users currently access its critical care data, and MIMIC-Chest X-Ray would be a boon for those in critical care medicine looking to supplement clinical data with images.

Another asset of the database lies in its timing. Researchers at the Stanford Machine Learning Group and the Center for Artificial Intelligence in Medicine & Imaging released a similar dataset last week, collected over 15 years at Stanford Hospital. The MIT LCP and Stanford groups collaborated to ensure that both datasets released could be used with minimal legwork for the interested researcher.

“With single center studies, you’re never sure if what you’ve found is true of everyone, or a consequence of the type of patients the hospital sees, or the way it gives its care. That’s why multicenter trials are so powerful. By working with Stanford, we’ve essentially empowered researchers around the world to run their own multicenter trials without having to spend the millions of dollars that typically costs.”

As with MIMIC-III, researchers will be able to gain access to MIMIC-Chest X-Ray by first completing a training course on managing human subjects and then agreeing to cite the database in their published work.

“We’re moving more towards having a complete history,” said Johnson. “When a radiologist is looking at a chest x-ray, they know who the person is, and why they’re there. If we want our models to be implemented and make radiologists’ lives easier, the models need to know who the person is, too.”


*Republished in MIT News.